Conveying Emotions Through Device-Initiated Touch

People involved

Marc Teyssier

Gilles Bailly

Catherine Pelachaud

Eric Lecolinet

Abstract

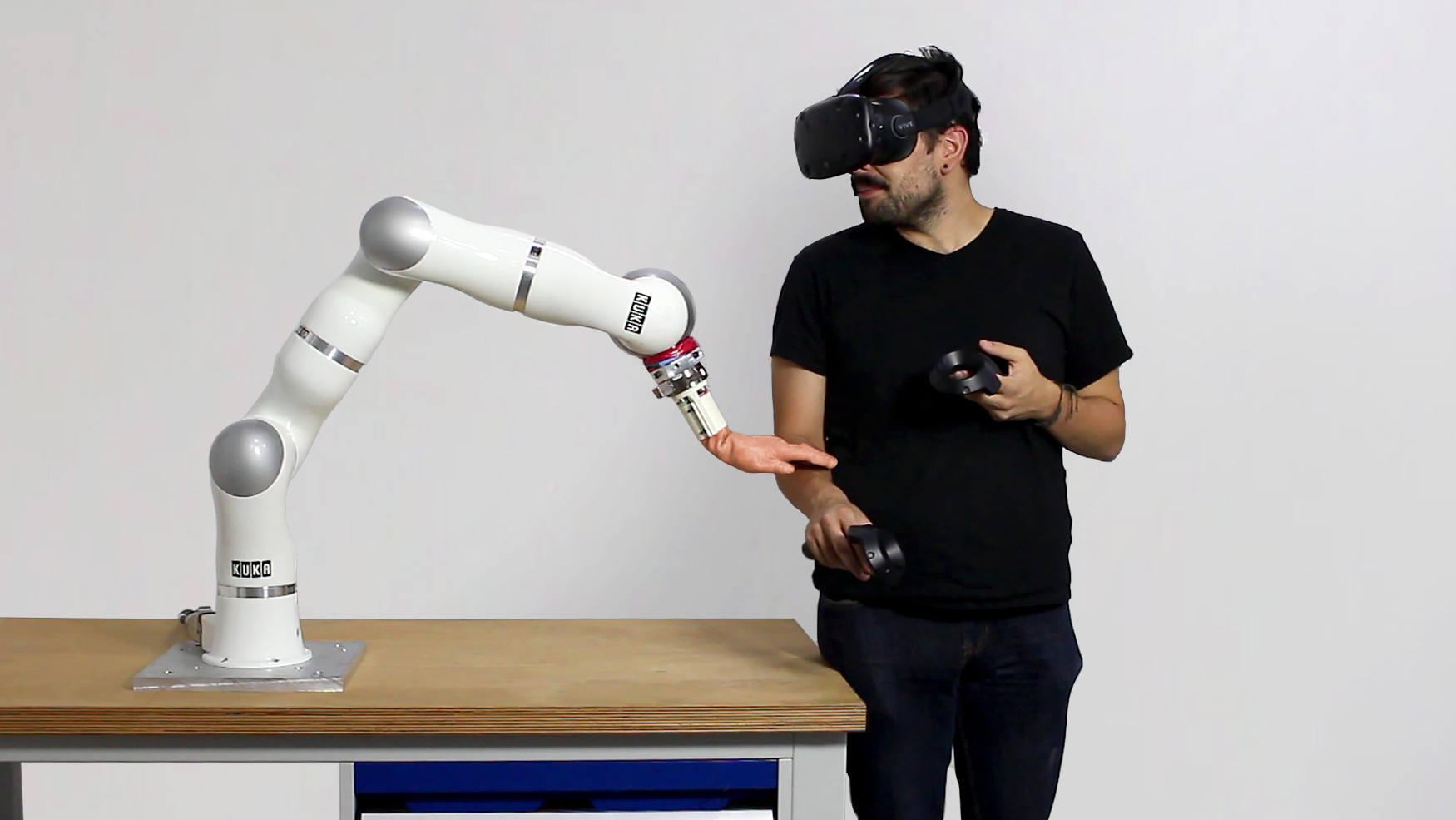

Humans can convey a wide array of emotions through rich and complex touch gestures. However, it is not clear how these gestures can be reproduced by interactive systems and devices in remote mediated communication. In this paper, we explore the design space of device-initiated touch for conveying emotions, using an interactive system that reproduces a set of human touch characteristics. To this end, we control a robotic arm that touches participants' forearms with varying force, velocity and amplitude to simulate human touch. With a view to adding touch as an emotional modality in human-machine interaction, we conducted our study in three steps. After designing the touch device, we explore touch in a context-free setup and then in a controlled context defined by textual scenarios and the emotional facial expressions of a virtual agent. Our results suggest that certain combinations of touch characteristics are associated with the perception of different degrees of valence and arousal. Moreover, when the signals are non-congruent (touch, facial expression and textual scenario that do not a priori convey the same emotion), the message conveyed by touch seems to prevail over those conveyed by the visual and textual signals.

Project description

Full project page: https://marcteyssier.com/projects/robot-touch/