27/06/2024 - Invited speaker: Panos MAVROS

Title: Cognitive and affective dimensions of pedestrian movement in cities

Abstract: Navigation is a complex cognitive process that recruits perceptual, cognitive and affective processes. Pedestrian navigation is particularly interesting because our choices have clear physical implications (effort, […]

04/04/2023 - 📊 IHM ’23 – Three presentations and a Demo

The HCI Sorbonne group presented three papers at the French conference on Human-Computer Interaction (IHM’23, https://ihm2023.afihm.org):

Sungeelee, Loriette, Sigaud, Caramiaux. Co-Apprentissage Humain-Machine: Cas d’Étude en Acquisition de Compétences Motrices. IHM’23, article

https://hal.science/IHM-2023/hal-04014981v1

Ferrier-Barbut, Avellino, Vitrani, […]

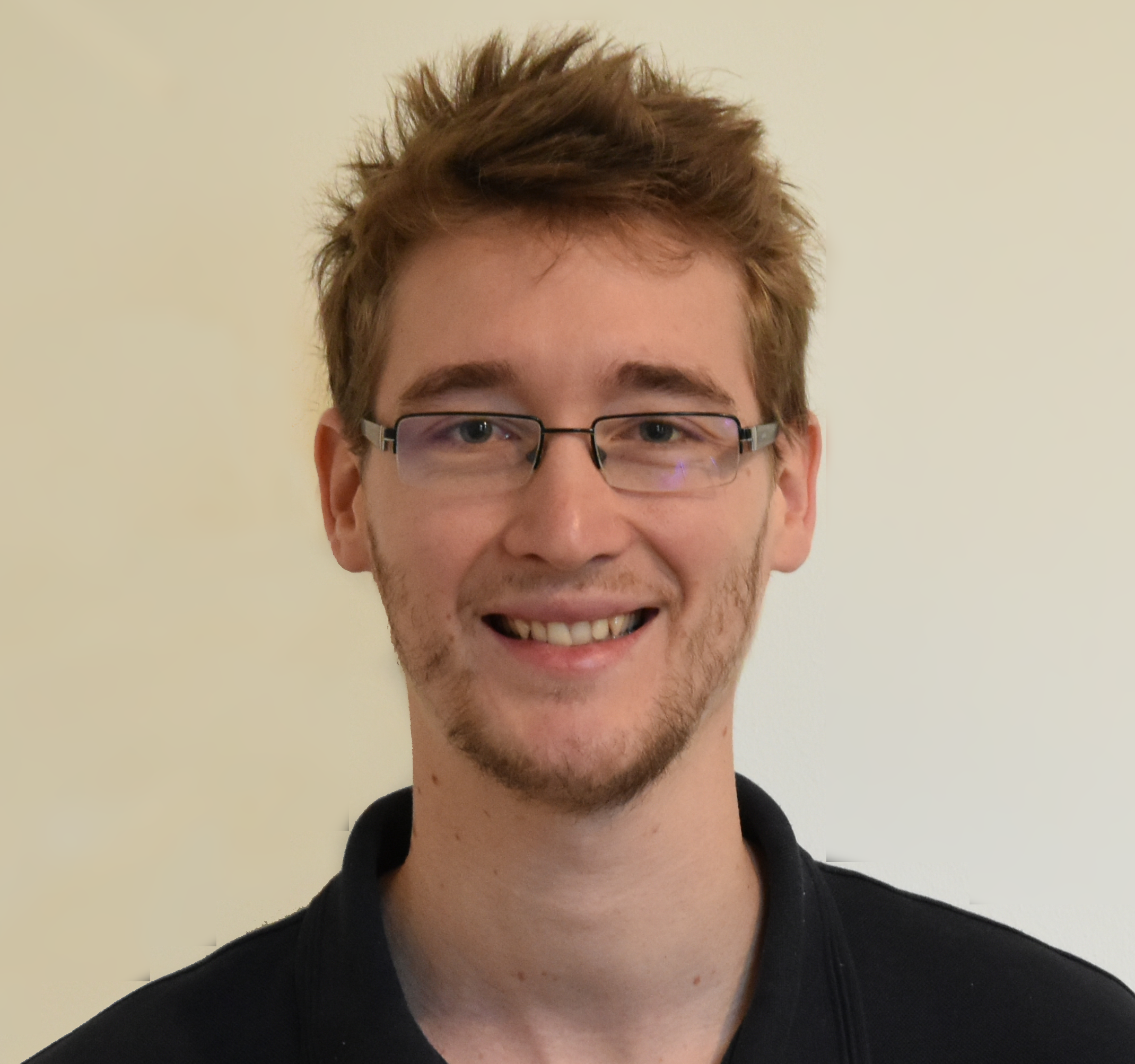

14/12/2022 - PhD Defense | Flavien Lebrun

Flavien Lebrun, supervised by Gilles Bailly and Sinan Haliyo, will defend his thesis entitled “Study of Visuo-Haptic Illusions in Virtual Reality: Understanding and Predicting Illusion Detection” on December 14, 2022.

12/12/2022 - PhD Defense | Oleksandra Vereschak

Oleksandra Vereschak, supervised by Gilles Bailly and Baptiste Caramiaux, will defend her thesis entitled “Understanding Human-AI Trust in the Context of Decision Making through the Lenses of Academia and Industry: Definitions, Factors, and Evaluation” on […]

07/12/2022 - PhD Defense | Benoît Geslain

Benoît Geslain, supervised by Gilles Bailly and Sinan Haliyo, will defend his thesis entitled “Visuo-haptic Illusions in Virtual Reality Design and Learning” on December 07, 2022.

20/06/2022 - PhD Defense | Téo Sanchez

Téo Sanchez, supervised by Baptiste Caramiaux and Wendy E. Mackay, will defend his thesis entitled “Interactive Machine Teaching with and for novices” on June 20, 2022.

Link to the live stream: [here]

Link to […]

20/10/2021 - PhD Defense | Elodie Bouzbib

Elodie Bouzbib, supervised by Gilles Bailly and Pascal Frey, will defend her thesis entitled “Robotised Tangible User Interface for Multimodal Interactions in VR: Anticipating Intentions to Physically Encounter the User” on Oct 20, 2021.

15/09/2021 - Jobs

We are continuously looking for excellent interns, PhD students, Postdocs and engineers in the following areas:

HCI & Cognitive Neurosciences: User modelling; Cognitive control; Skill acquisition; Role of practice; Decision-making; Behavioral change; Cognitive bias; Computational Rationality

HCI […]

02/09/2021 - Award | INTERACT 2021

Flavien Lebrun, PhD student, won the IFIP TC13 Pioneers’ Award for Best Doctoral Student Paper for the paper “A Trajectory Model for Desktop-Scale Hand Redirection in Virtual Reality” (Interact’21), co-authored with Gilles Bailly and Sinan Haliyo.

Springer […]

20/10/2020 - Publication | CoVR accepted at UIST’20

CoVR is a robotic interface that provides large force-feedback to users in Virtual Reality. It consists of an XY-Cartesian robot mounted on the ceiling, which moves according to the users’ intentions, in order to […]

06/02/2020 - PhD Defense | Marc Teyssier

Marc Teyssier, supervised by Eric Lecolinet, Gilles Bailly, and Catherine Pelachaud, will defend his thesis entitled “Anthropomorphic Devices for Affective Touch Communication” on Feb 6, 2020.

15/11/2019 - Guest Talk: Lonni Besançon

Title: Automatic Visual Censoring: from Science-Fiction to Reality

Who: Lonni Besançon

Where: Sorbonne Université, Campus Jussieu, Room H20 (Pyramid)

When: November 15, 16:00

Abstract: We present the first empirical study on using color manipulation and stylization to make surgery […]

04/06/2019 - Guest Talk: Jan Gugenheimer from Ulm University

Title: Social HMDs: Designing Mixed Reality Technology to Fit into the Social Fabric of our Daily Lives

Speaker: Jan Gugenheimer

When – Where: Sorbonne Université, Room 304. Tuesday, June 4th.

Abstract: Technological advancements in the fields […]

13/03/2019 - Guest Talk: Alvaro Cassinelli

Experiments on alternative locomotion techniques for micro-robots

Where: Room 304 (Campus Jussieu)

When: 2:00PM

Host: Nicolas Bredeche

In this informal talk I will describe some experiments on alternative methods of locomotion for micro or macro objects not using wheels or other […]

12/12/2018 - Guest Talk: Anne Roudaut from University of Bristol

When: December 12th, 10AM

Where: Room H20, Pyramide (ISIR), Campus Jussieu, Sorbonne Université

Title: Toward Highly Reconfigurable Interactive Devices

Abstract: The static shape of computers is the bottleneck of today’s interactive systems. I argue that we need shape-changing computers that […]

19/10/2018 - PhD Defense – Emeline Brulé

When: October 19th, 2018, 9:00 AM

Where: Paris Telecom

Title: Understanding the experiences of schooling of visually impaired children: A French ethnographic and design inquiry.

Jury:

Madeleine Akrich, DR, CSI, Mines ParisTech, CNRS i3, PSL University (rapporteur)

Gilles Bailly, CR […]

30/05/2018 - Guest Talk: Edward Lank from the University of Waterloo

When: Wednesday, May 30th, 11:00 AM

Where: Sorbonne Université, Campus Jussieu, Room 211 (second floor) Tower 55-65

Title: WRiST: Wearables for Rich, Subtle, and Transient Interactions in Ubiquitous Environments

Speaker: Edward Lank

Host: Gilles Bailly

Abstract: Modern personal computing devices – tablets, smartphones, […]

16/05/2018 - Guest Talk: Alix Goguey from Swansea University

When: May 16th, 2018, 11:00 AM

Where: Sorbonne Université, Campus Jussieu, Pyramid H20.

Title: Augmenting Touch Expressivity to Improve the Touch Modality

Abstract: During the last decades, touch surfaces have become more and more ubiquitous. Whether on tablets, […]

31/10/2017 - UEIS 2017: New Trends in User Expertise and Interactive Systems

UEIS 2017 is the first International Symposium on User Expertise and Interactive Systems. UEIS is a place where researchers and practitioners from Human-Computer Interaction, Cognitive Science, and Experimental Psychology discuss the latest trends in command selection and user expertise on PCs, tablets, […]

31/05/2017 - Guest Talk: Justin Mathew from INRIA

Title: Interaction techniques for 3D audio production and mixing

Where: ISIR Lab, Room H20

When: May 31, 4:00PM

Abstract: There has been a significant interest in providing immersive listening experiences for a variety of applications, and […]