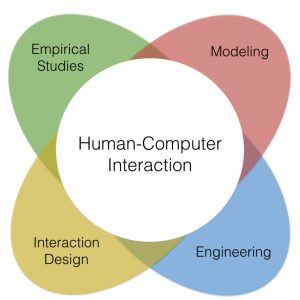

Our group aims to understand how people perceive and interact with information and technologies, and to design technologies that increase users’ expertise and support their cognition. A central aspect of our work is combining multiple approaches to address HCI challenges across a wide range of applications.

Approach

Empirical Studies serve to understand human perception and behavior, or to validate hypotheses, models, or prototypes. We conduct both qualitative and quantitative experiments, in controlled environments as well as in the field.

Models serve to synthesize complex phenomena into theoretical foundations that can then guide the design of interactive systems. We consider both descriptive models (e.g., taxonomies) and predictive models (e.g., behavioral and cognitive models).

Interaction Design serves to explore the scientific design space of interaction and visualization techniques. We design, implement, and evaluate both hardware and software solutions with goals such as improving performance, facilitating the transition from novice to expert behavior, or leveraging users’ cognition.

Engineering. Our experience has shown the need to develop tools that help both HCI researchers and designers create and study interaction techniques.

Applications

As illustrated by our research projects below, we consider a wide range of applications built on existing, emerging, and future technologies, both for desktop interaction and for interaction beyond the desktop: mobile devices, wearable devices, tangible interaction, augmented reality (AR), virtual reality (VR), large displays, and shape-changing interfaces.

Research Projects

2023 – Machine Learning for Musical Expression

2023 – Variance Profiles

2023 – Surgical Teleconsulting Through ARHMDs

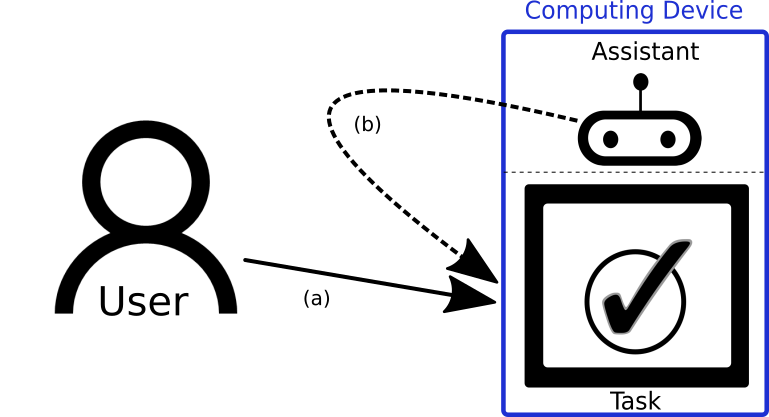

2023 – Cooperative Interfaces

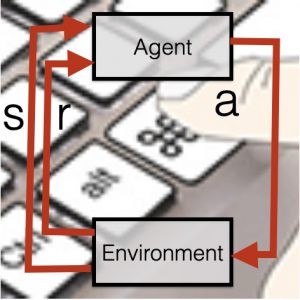

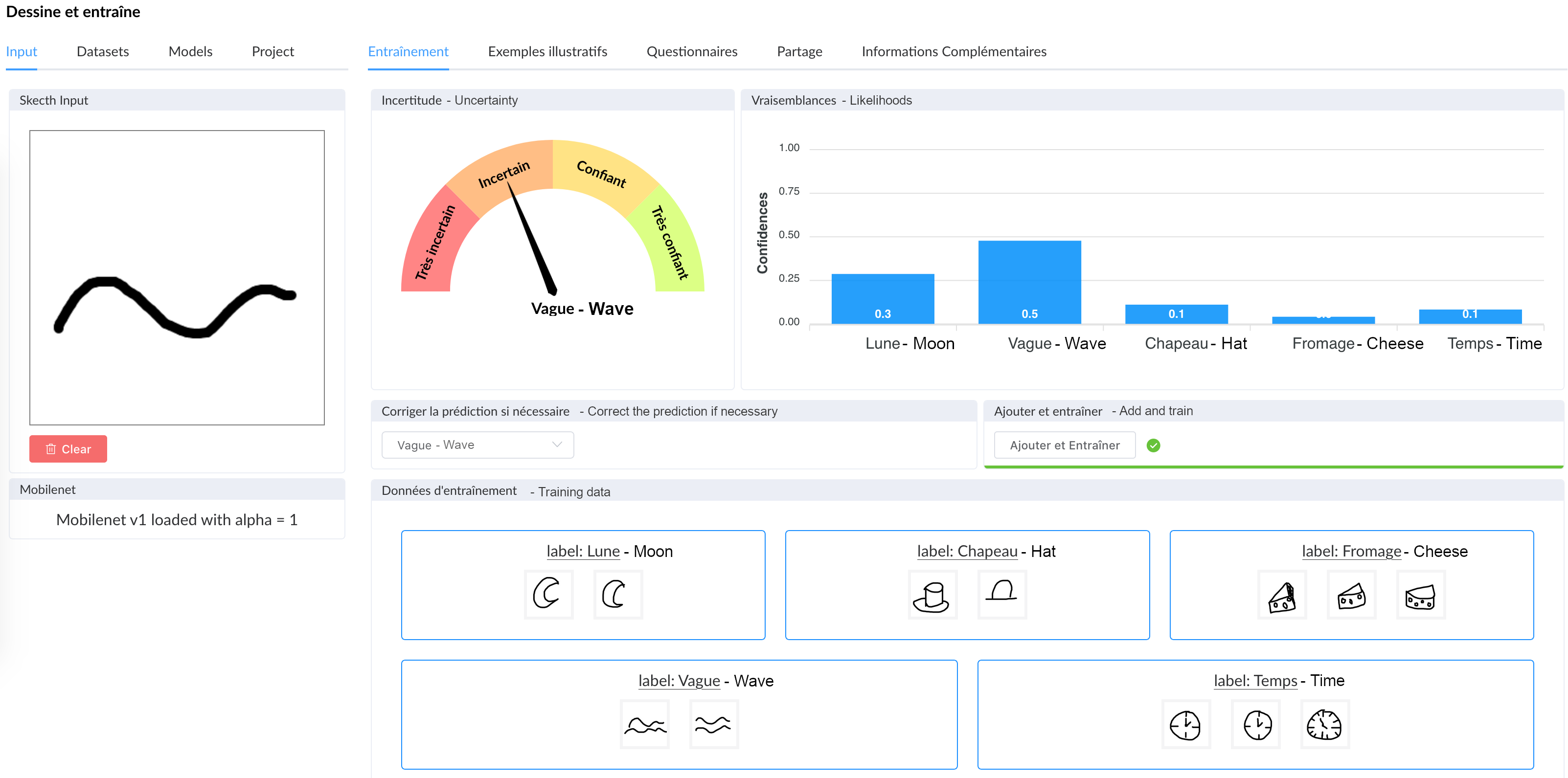

2023 – Human-Machine Co-Learning (Co-Apprentissage Humain-Machine)

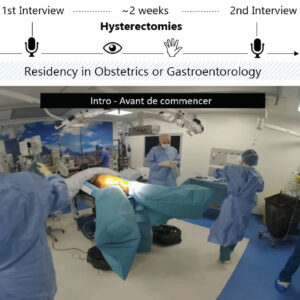

2023 – Surgical Training Through Videos

2022 – Capturing Approaches in Shared Fabrication

2021 – Model of the Transition to Shortcuts

2021 – Practices and Politics of Artificial Intelligence in Visual Arts

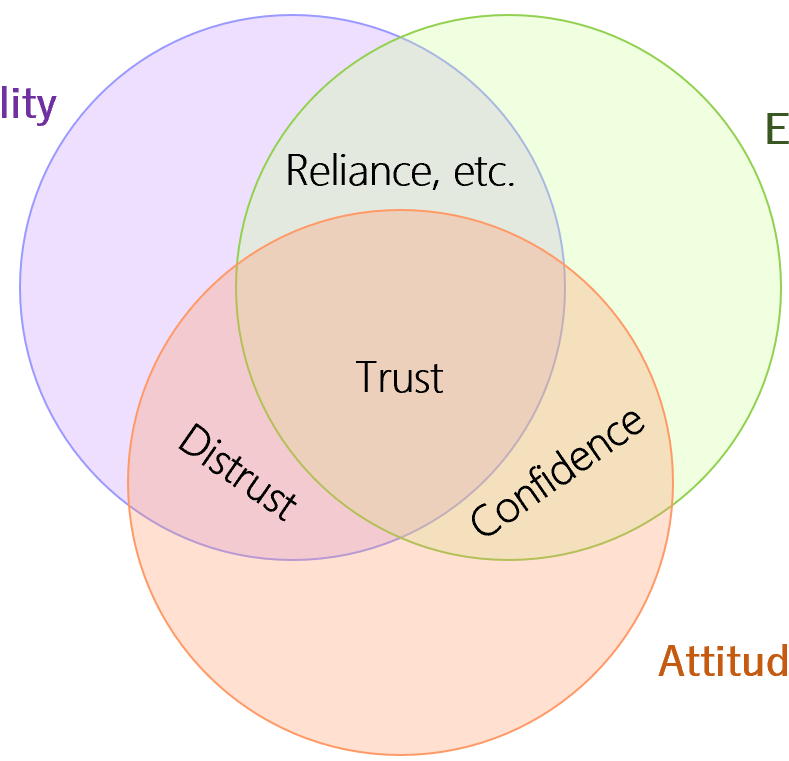

2021 – How to Evaluate Trust in AI-Assisted Decision Making?

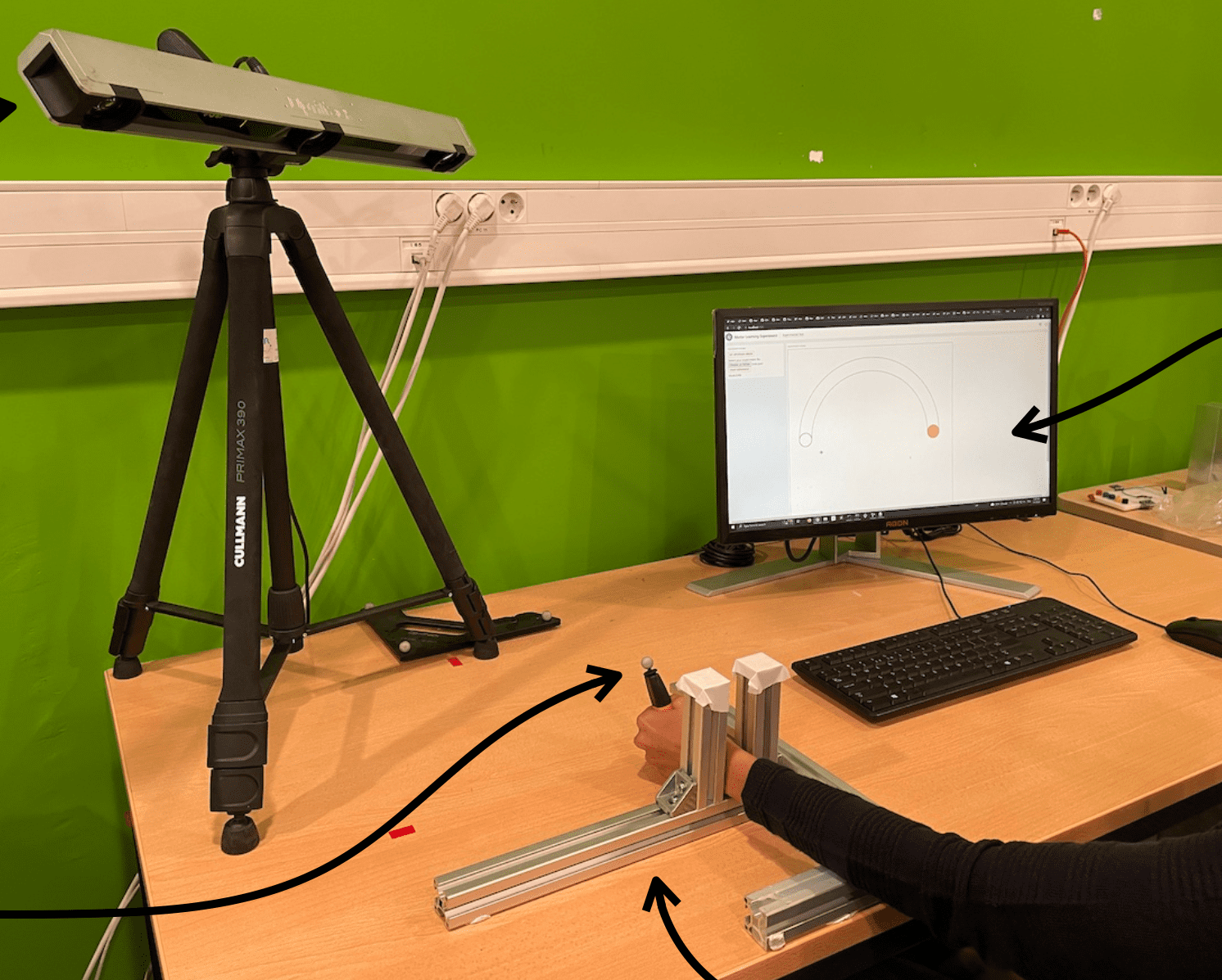

2021 – Visuo-Haptic Illusions in VR

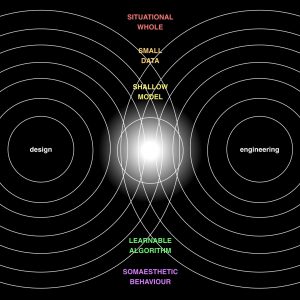

2021 – Diffractive ML Prototyping

2021 – Adaptive Interfaces

2021 – “Can I Touch This?”

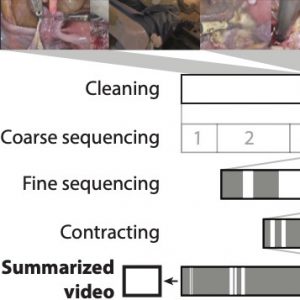

2021 – Surgical Video Summarization

2021 – How Do People Train a Machine?

2020 – Social Touch

2020 – CoVR

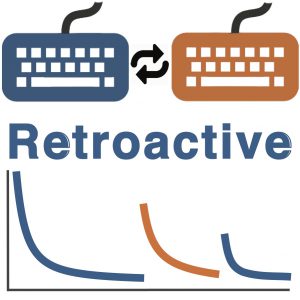

2020 – Retroactive Transfer

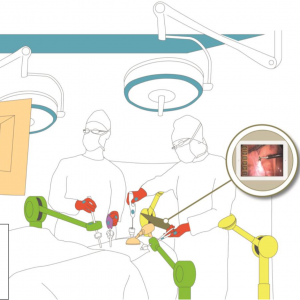

2020 – Multimodal and Mixed Control of Robotic Endoscopes

2019 – Impacts of Telemanipulation in Robotic Assisted Surgery

2019 – Skin-On Interfaces

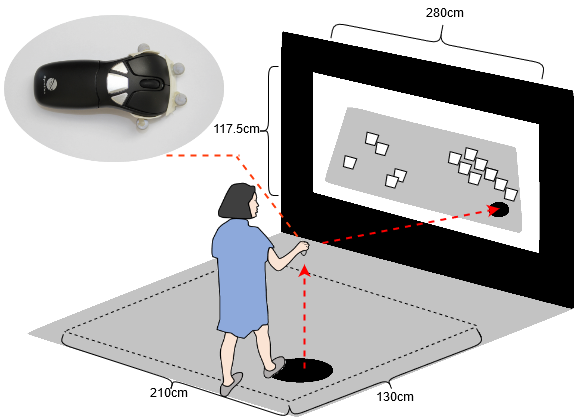

2019 – Locomotion and Overview

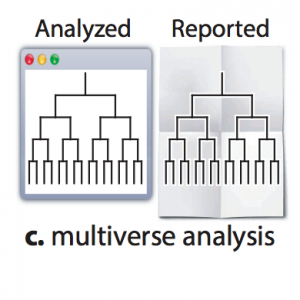

2019 – Explorable Multiverse Analyses

2019 – Mitigating the Attraction Effect with Visualizations

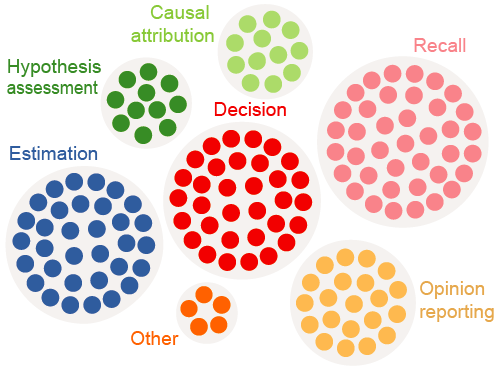

2019 – Cognitive Biases

2018 – MobiLimb

2017 – VersaPen

2017 – Embedded Vis

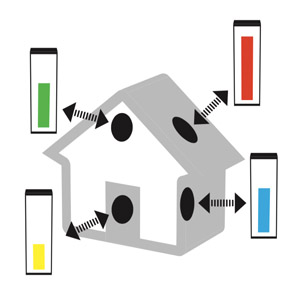

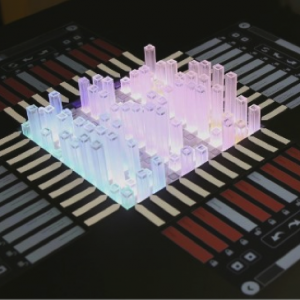

2017 – Dynamic Physical Data

2016 – TouchToken

2016 – LivingDesktop

2016 – MapSense

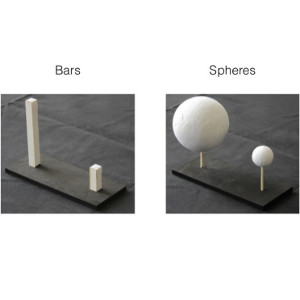

2016 – Size Perception

2015 – iSkin

2015 – Data Physicalization

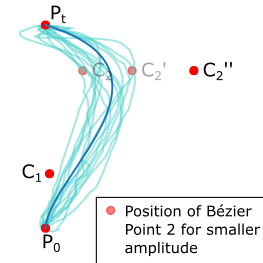

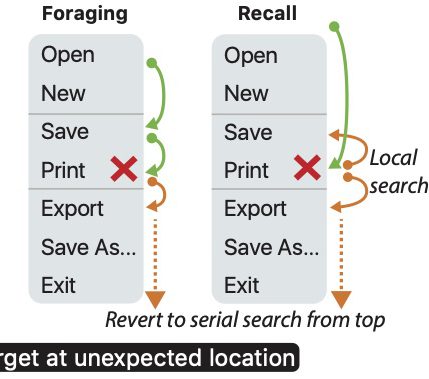

2014 – Menu Modeling

2013 – Keyboard Shortcuts

2012 – Finger-Count Interaction